Testing for latency

Latency is an often forgotten concern when it comes to website performance. There are ways to test your current site under a variety of performance settings, including custom latency.

Recently I’ve been working on a talk for the MobX Conference in Berlin, Germany. The talk was originally going to be about building for performance, focussing on the approach to building responsible responsive websites. I wanted to keep performance at the front of everyone’s minds and debunk the traditional argument that responsive sites are too slow for mobile. While I ended up changing tack and giving a more general talk, I still spent time looking at the causes of poor performance and how to overcome them.

The only way you can truly overcome something is by having a good understanding of the problem at hand, which in turn allows you to track and test the challenges from every angle. When it came to responsive design and performance, there were a few different areas I looked at for the talk, including preconnect, prefetch, prerender, critical CSS and latency (plus a few more that didn’t make the talk).

Latency is one area of web performance that never seems to get much attention, perhaps because it feels like something that can’t really be changed.

What is latency?

Let’s defer to the all-knowing Google/Wikipedia…

Latency is a time interval between the stimulation and response, or, from a more general point of view, as a time delay between the cause and the effect of some physical change in the system being observed.

In web terms, it is the round-trip time between making an HTTP request and receiving the response. The longer a request takes to get an answer, the higher the latency. This has no bearing on whether you’re requesting a 10KB HTML document or a 5MB image; we’re talking about the time from the start of the request until you get an answer. For the HTML document and the image, the latency will be the same (assuming they’re on the same server). The image will take longer to download, but that extra download time is down to connection speed/bandwidth, not latency.
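To make the distinction concrete, here’s a rough sketch in Python. It assumes a simplified model where fetching one resource costs one round trip plus its size divided by the bandwidth (ignoring DNS, connection setup and TCP slow start). The `request_time_ms` helper and the 5 Mbps/50ms connection are hypothetical, chosen only for illustration.

```python
def request_time_ms(size_bytes: int, bandwidth_bps: float, rtt_ms: float) -> float:
    """Rough time to fetch one resource: one round trip plus transfer time.

    Simplified model (an assumption): ignores DNS, TCP/TLS handshakes,
    TCP slow start and server processing time.
    """
    # bits / (bits per second) gives seconds; multiply by 1000 for ms
    transfer_ms = size_bytes * 8 / bandwidth_bps * 1000
    return rtt_ms + transfer_ms


BANDWIDTH = 5_000_000  # hypothetical 5 Mbps connection, in bits per second
RTT = 50               # hypothetical 50ms round trip

html = request_time_ms(10_000, BANDWIDTH, RTT)      # 10KB HTML document
image = request_time_ms(5_000_000, BANDWIDTH, RTT)  # 5MB image

# Both requests pay the same 50ms of latency; only the transfer part differs.
print(f"HTML:  {html:.0f}ms (latency {RTT}ms)")
print(f"Image: {image:.0f}ms (latency {RTT}ms)")
```

Under this model the HTML document takes about 66ms and the image about 8050ms, yet each spends exactly the same 50ms waiting on the round trip.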

Front end performance testing tools

I like to use a variety of testing tools when looking at the performance of sites. Each tool is better at one thing or another, and a good, even spread of results helps ensure I’m taking everything into consideration.

In my work on the talk I was able to test and retest the results to show the improvement for preconnect, prefetch, prerender, and critical CSS but I wasn’t really able to track down latency issues. The tools I use for testing include

Web Page Test is the only tool in this set that lets you vary latency during testing. By default it does this through a set of preset connection profiles that reflect the kinds of connections people typically use. Each profile gives you two pieces of information: the bandwidth in Mbps or Kbps (download/upload speeds) and the RTT (Round Trip Time, otherwise known as latency) in milliseconds:

- Cable: 5/1 Mbps, 28ms RTT

- DSL: 1.5 Mbps/384 Kbps, 50ms RTT

- 3G Fast: 1.6 Mbps/768 Kbps, 150ms RTT

- 3G: 1.6 Mbps/768 Kbps, 300ms RTT

- 3G Slow: 780 Kbps/330 Kbps, 200ms RTT

- 2G: 35 Kbps/32 Kbps, 1300ms RTT

- Dial-up 56k modem: 49/30 Kbps, 120ms RTT (it would be awesome if they also played the old dial-up modem sounds with this).

The trouble I had was that I wanted to compare a 5Mbps connection with 50ms of latency against a 10Mbps connection with 500ms of latency, to show that more bandwidth is not necessarily faster if the latency is worse.
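A rough way to see why that comparison matters, without running a test, is to model load time as a number of sequential round trips plus raw transfer time. This is a simplified sketch, not how Web Page Test measures anything: the `load_time_s` helper, the 20 round trips and the page sizes are all assumptions for illustration.

```python
def load_time_s(round_trips: int, total_bytes: int,
                bandwidth_mbps: float, rtt_ms: float) -> float:
    """Very rough load estimate: sequential round trips plus transfer time.

    Assumes requests happen one after another (no parallelism) and that
    bandwidth is fully used during transfer; real browsers are more complex.
    """
    latency_s = round_trips * rtt_ms / 1000
    transfer_s = total_bytes * 8 / (bandwidth_mbps * 1_000_000)
    return latency_s + transfer_s


# The two connections being compared: (bandwidth in Mbps, RTT in ms)
connections = {
    "5 Mbps, 50ms RTT": (5, 50),
    "10 Mbps, 500ms RTT": (10, 500),
}

# Hypothetical workloads: (number of round trips, total bytes)
workloads = {
    "web page: 20 round trips, 1MB": (20, 1_000_000),
    "single download: 1 round trip, 5MB": (1, 5_000_000),
}

for workload, (trips, size) in workloads.items():
    print(workload)
    for name, (mbps, rtt) in connections.items():
        print(f"  {name}: {load_time_s(trips, size, mbps, rtt):.2f}s")
```

With these made-up numbers the page takes about 2.6s on the 5Mbps/50ms connection and about 10.8s on the 10Mbps/500ms one, while the single 5MB download flips the other way (about 8.05s versus 4.5s). That single-download case is exactly where bandwidth matters most.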

Customising Web Page Test options

Thanks to the incredibly helpful Andy Davies, I was pointed towards the “Custom” options in Web Page Test.

These allow you to specify a number of different settings for your tests. Let’s take a look at two of them, latency and bandwidth, in a little more detail.
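If you’d rather script custom tests than fill in the web form, Web Page Test also has an HTTP API. The sketch below only builds a test URL; it doesn’t send anything. It assumes the `runtest.php` parameters as I understand them from the Web Page Test API documentation (`connectivity=custom`, `bwDown`/`bwUp` in Kbps, `latency` in ms, `f=json`), so check the current docs before relying on it. The API key, the `Dulles:Chrome` location, the `custom_test_url` helper and the 1000 Kbps upload speed are placeholders.

```python
from urllib.parse import urlencode

# Assumption: endpoint and parameter names follow the Web Page Test
# runtest.php API docs; verify against the current documentation.
WPT_RUNTEST = "https://www.webpagetest.org/runtest.php"


def custom_test_url(page_url: str, down_kbps: int, up_kbps: int, rtt_ms: int,
                    api_key: str = "YOUR_API_KEY",      # placeholder key
                    location: str = "Dulles:Chrome") -> str:  # placeholder location
    """Build a runtest.php URL for a custom bandwidth/latency profile."""
    params = {
        "url": page_url,
        "k": api_key,
        "location": location,
        "connectivity": "custom",  # use the custom profile below
        "bwDown": down_kbps,       # download bandwidth in Kbps
        "bwUp": up_kbps,           # upload bandwidth in Kbps
        "latency": rtt_ms,         # round trip time in ms
        "f": "json",               # ask for a JSON response
    }
    return f"{WPT_RUNTEST}?{urlencode(params)}"


# The 5Mbps/50ms and 10Mbps/500ms comparison (upload speed is a guess).
for down, rtt in [(5000, 50), (10000, 500)]:
    print(custom_test_url("https://example.com/", down, 1000, rtt))
```

Keeping everything but bandwidth and latency fixed means any difference between the two runs comes from those two settings.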

Bandwidth

Bandwidth is something we are mostly aware of because it’s what we place the most importance on; it’s most often referred to as download speed.

We’ve been conditioned to believe that the faster the download speed, or the bigger the pipe for the data to travel through, the faster the experience we will have.

This holds true for downloading movies, because there is a single request for a file and the more we can pour down the pipe, the faster we will download it.

Bandwidth is how much data can travel across the network at one time. Imagine a crowd of people walking along a footpath. If that footpath is 5 metres wide, lots of people can move along it at once and there will be very little delay. If the same crowd suddenly had to move onto a path that is only one person wide, it would take longer for them to reach their destination.

Latency

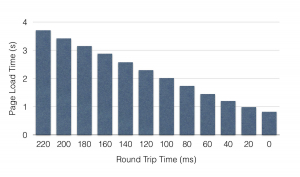

Latency is something we’re less aware of as an industry. While we spend our time worrying about bandwidth and the size of our assets, reducing latency can in fact make the biggest improvement to site speed.

Latency is the amount of time it takes for a request to go from your browser to the server and back again, also known as Round-Trip Time (RTT). We can improve the speed of our websites by reducing the size of our files (and therefore the bandwidth we need), but another option is to reduce the time each request takes.
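You can get a rough feel for RTT from your own machine by timing a TCP connection, since the handshake takes about one round trip. This is a minimal sketch using only Python’s standard library. `tcp_connect_rtt_ms` is a hypothetical helper; it measures the path from wherever you run it (not your users’ devices), and it resolves DNS first so lookup time isn’t counted as latency.

```python
import socket
import time


def tcp_connect_rtt_ms(host: str, port: int = 443, timeout: float = 5.0) -> float:
    """Approximate one round trip by timing a TCP handshake.

    connect() returns once the SYN / SYN-ACK exchange completes,
    which takes roughly one RTT.
    """
    # Resolve the hostname up front so DNS time isn't counted as latency.
    family, socktype, proto, _, addr = socket.getaddrinfo(
        host, port, type=socket.SOCK_STREAM)[0]
    with socket.socket(family, socktype, proto) as sock:
        sock.settimeout(timeout)
        start = time.perf_counter()
        sock.connect(addr)  # blocks until the handshake completes
        return (time.perf_counter() - start) * 1000
```

For example, `min(tcp_connect_rtt_ms("example.com") for _ in range(5))` gives a best-of-five estimate against a server you’re allowed to probe; taking the minimum filters out one-off delays.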

Fixing Latency

If you have a latency problem, you have two options.

- Find a way to travel faster than the top speed in the universe, otherwise known as the speed of light; or

- Reduce the distance (and therefore time) between the user and the server

… which pretty much means you have one option.

This can be done by using a content delivery network (CDN) like Fastly or Cloudflare. These services serve your website from multiple locations around the world, so when someone requests a page they get it from the closest possible location (and therefore the fastest).
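The speed-of-light limit is easy to put numbers on. Light in optical fibre travels at roughly two thirds of its speed in a vacuum, about 200 km per millisecond, so distance alone sets a floor on RTT. This sketch uses hypothetical distances for an origin server on the other side of the world versus a nearby CDN edge location; real routes are longer and add routing delays, so measured RTTs will be higher.

```python
# Light in optical fibre travels at roughly two thirds of c,
# about 200,000 km/s, i.e. about 200 km per millisecond.
FIBRE_KM_PER_MS = 200


def min_rtt_ms(distance_km: float) -> float:
    """Theoretical best-case RTT: there and back at fibre light speed.

    Real routes are longer than the straight-line distance and add
    routing and queuing delays, so measured RTTs are always higher.
    """
    return 2 * distance_km / FIBRE_KM_PER_MS


# Hypothetical distances for illustration only.
print(f"Origin server ~17,000 km away: {min_rtt_ms(17_000):.0f}ms minimum RTT")
print(f"CDN edge ~100 km away:         {min_rtt_ms(100):.0f}ms minimum RTT")
```

Under those assumptions the distant origin can’t do better than about 170ms per round trip, while the nearby edge location is around 1ms, and a page that needs several round trips pays that difference on every one of them.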

Conclusion

This is more of an article about how to test for latency and one solution for reducing it on your website. There is an entirely different, and much more complicated, layer to this when you’re looking at mobile connections to nearby towers, or at the HTTP/2 specification, the new standard the web will be working on. These are much more detailed discussions that deserve their own post.

For now, you can use the custom options in Web Page Test to ensure your sites perform well across a variety of connection configurations.